DPUs, GPUs, and CPUs in the Data Center

The Case for Diversification

Data Processing Units (DPUs) have become unavoidable in data centers running AI and HPC workloads. General-purpose CPUs are running up against physical scaling limits, and their design favors general application code over bulk data processing. GPUs were not designed to handle multiple workloads and their data efficiently. DPUs enable a new class of accelerated processing that efficiently runs heterogeneous, data-centric tasks and offloads them from GPUs and CPUs.

Novel DPUs prioritize efficiency and target specific data processing needs. By offloading data services, networking, or compute tasks, DPUs enhance overall data center performance and reduce power consumption. Their programmability lets them adapt to diverse workloads, which is crucial in heterogeneous data center architectures.

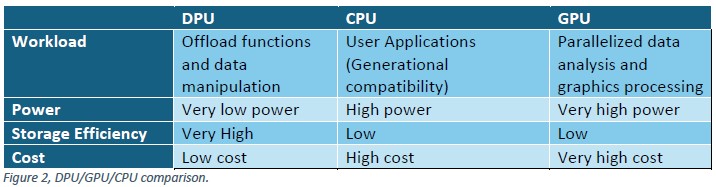

With specialized low-power cores, coprocessors, and high-speed interfaces, DPUs streamline data processing operations and ensure seamless connectivity with other data center components. For any given workload or architecture, DPUs, CPUs, and GPUs can be compared on the combination of these factors as part of the overall business decision.

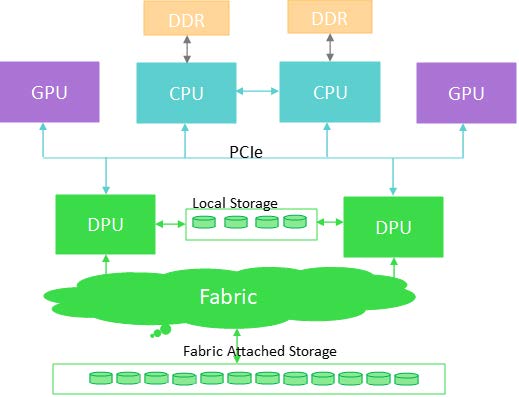

The ideal data center has all three processor types working in harmony, as each processor category is excellent at its job and shouldn’t be replaced. In many data centers today, only two types of processors are doing all the work. Adding a third processor, the DPU, takes over tasks that are a poor fit for the CPU and GPU and performs them more efficiently. DPUs can be inserted into architectures alongside the other two processors to pick up all data management: protection, security, and reduction (see Figure 1). This architecture is just one example; the DPU can just as easily perform the same functions on the other end of the fabric.

DPU vs GPU vs CPU

Let’s discuss the relative strengths of the three types of processors: DPU, CPU, and GPU. Figure 2 shows the key differences among the three processor categories that contribute to storage efficiency. The differences in processing capability can be described using a horse analogy.

CPU – The Workhorse

The CPU has been around for decades and, as a result, has had to remain generic so that it can be both forward and backward compatible across the great number of applications that it must support. The CPU is the workhorse that day after day plows the fields of the hundreds of thousands of applications that rely on it. It’s expensive and hungry, and its strength is in its sameness, generation after generation.

All the world’s programming tools are built around this sameness. But because of that very generality, the CPU is not efficient for pure data-processing tasks. Until a few years ago, it was the only real processing game in town, and it bloated into a very expensive and power-hungry beast.

GPU – The Thoroughbred

GPUs, by contrast, are specialized processors for video and graphics data. They are more expensive than CPUs and very, very power-hungry.

In our horse analogy, they would be the thoroughbred racehorse. They do one thing incredibly well but have poor data management metrics. And just as a racehorse is temperamental, GPUs have historically struggled to adapt to tasks outside of graphics processing because they are difficult to program. To be fair, this impediment is easing, but GPUs simply aren’t built to handle day-to-day storage services.

DPU – The Pony Express

Lean and fast, with the high endurance needed to travel hundreds of miles over varying terrain, Pony Express ponies were purposely chosen for service because they had the exact traits needed for the job. The DPU processor is a low-power, low-cost, purpose-built processing unit that handles data processing far more efficiently than its cousins. It’s not going to plow fields nor win the Kentucky Derby, but it does what it does incredibly well.

Better Together

CPUs, DPUs, and GPUs each play a vital role in the data center and should be considered peers that perform functions according to their strengths.

The CPU will always perform the heavy lifting of running user applications, VMs, and containers. The GPU will be the most efficient at handling heavily parallelized computations. The DPU is the glue between them, carrying out network, security, and storage functions, including data manipulation, which neither the CPU nor the GPU does well.
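The division of labor described above can be written down as a simple placement table. This is only an illustrative sketch: the category labels, the `PLACEMENT` table, and the `place` helper are hypothetical names chosen for this example, not part of any Kalray or scheduler API.

```python
from enum import Enum


class Processor(Enum):
    CPU = "cpu"
    GPU = "gpu"
    DPU = "dpu"


# Task categories follow the paragraph above; the string labels themselves
# are illustrative assumptions, not identifiers from a real product.
PLACEMENT = {
    "user_application": Processor.CPU,
    "virtual_machine": Processor.CPU,
    "container": Processor.CPU,
    "parallel_compute": Processor.GPU,
    "network": Processor.DPU,
    "security": Processor.DPU,
    "storage": Processor.DPU,
}


def place(task_category):
    """Return the processor type this article assigns to a task category."""
    try:
        return PLACEMENT[task_category]
    except KeyError:
        raise ValueError(f"unknown task category: {task_category!r}") from None
```

For instance, `place("storage")` returns `Processor.DPU`, while `place("container")` returns `Processor.CPU`. A real placement decision would also weigh cost, power, and current load, which this table deliberately ignores.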

A DPU Processor Improves Performance and Lowers TCO in the Data Center

CPUs and GPUs have been around for a long time, and both serve a particular purpose in the data center. Neither one, however, actively lowers cost or power utilization; they contribute to Total Cost of Ownership (TCO) reduction only by being efficient in the specific roles they play.

These processors are also very expensive, consume a lot of power, and constitute a wasted resource when deployed against jobs they aren’t equipped for. Consider a technician performing work with an outdated toolbox. If new tools exist that will save that technician considerable time and effort, it’s in the technician’s best interest to adopt them and become more productive. The same goes for the DPU: we are simply introducing a new tool into the data center that should greatly increase storage efficiency.

When to Use a DPU

DPUs are in their element when they are creating and managing RAID sets or erasure-coded sets, encrypting and decrypting data, deduplicating or compressing data, or managing the Quality of Service (QoS) of the data. These are among the tasks that DPUs do best and that the other processors do very poorly. When the CPU and GPU shed these tasks, they become more available for billable workloads.
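To make these data services concrete, here is a minimal Python sketch of three of them: XOR parity of the kind used in RAID-5-style protection, chunk deduplication, and compression. It uses only the standard library and runs on a CPU; it illustrates what the work looks like, not how a DPU implements it. All function names are hypothetical, and fixed, pre-split chunks are a simplifying assumption.

```python
import hashlib
import zlib
from functools import reduce


def xor_parity(blocks):
    """Return the byte-wise XOR of equally sized blocks (RAID-5-style parity)."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("all blocks must have the same length")
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def rebuild_missing(surviving_blocks, parity):
    """Recover a single lost block by XOR-ing the survivors with the parity."""
    return xor_parity(list(surviving_blocks) + [parity])


def dedupe_and_compress(chunks):
    """Store each distinct chunk once, compressed, keyed by its SHA-256 digest."""
    store = {}   # digest -> compressed payload
    recipe = []  # ordered digests needed to rebuild the original stream
    for chunk in chunks:
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in store:
            store[digest] = zlib.compress(chunk)
        recipe.append(digest)
    return store, recipe


def restore(store, recipe):
    """Rebuild the original byte stream from the chunk store and recipe."""
    return b"".join(zlib.decompress(store[d]) for d in recipe)
```

With three equal-sized blocks, `rebuild_missing` recovers any one lost block from the other two plus the parity. `dedupe_and_compress` stores a repeated chunk only once, and `restore` replays the recipe to reproduce the original stream. Every byte passes through these loops, which is why such services consume so many cycles when left on a general-purpose processor.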

DPU processors emerged organically from the convergence of two needs: a local data processor for data analytics where a GPU is too heavy, and smarter Network Interface Cards (NICs) with programmability features.

As the role of the DPU expanded into storage offload, it became evident that, if architected carefully, it could process data more efficiently and at lower power and cost than a traditional CPU or GPU. In large-scale data centers where storage growth is exponential, the efficiency of a DPU results in significant savings in both time and expense.

These cost and power savings matter most at scale. Like everything else on Mother Earth, the amount of power that a data center can consume is finite. As data centers seek ways to do more with less power, DPU utilization will become mandatory.

Tim LIEBER

Lead Solutions Architect, Kalray

Tim Lieber is a Lead Solutions Architect with Kalray, working with product management and engineering. His role is to innovate product features that use the Kalray MPPA DPU to solve data center challenges with solutions that improve performance and meet aggressive TCO efficiency targets. Tim has been with Kalray for approximately 4 years. He has worked in the computing and storage industry for 40+ years in innovation, leadership, and architectural roles.